Amazon Web Services announced an AI chatbot for enterprise use, new generations of its AI training chips, expanded partnerships and more during AWS re:Invent, held from November 27 to December 1, in Las Vegas.

The focus of AWS CEO Adam Selipsky’s keynote, held on day 2 of the conference, was on generative AI and how to enable organizations to train powerful models through cloud services.

Jump to:

- Graviton4 and Trainium2 chips announced

- Amazon Bedrock: Content guardrails and other features added

- Amazon Q: Amazon enters the chatbot race

- Amazon S3 Express One Zone opens its doors

- Salesforce now available on AWS Marketplace

- Amazon removes ETL from more Amazon Redshift integrations

- Introducing Amazon One Enterprise authentication scanner

- NVIDIA and AWS make cloud pact

Graviton4 and Trainium2 chips announced

AWS announced new generations of its Graviton chips, which are server processors for cloud workloads, and Trainium, which provides compute power for AI foundation model training.

Graviton4 (Figure A) has 30% better compute performance, 50% more cores and 75% more memory bandwidth than Graviton3, Selipsky said. The first instance based on Graviton4 will be the R8g Instances for EC2 for memory-intensive workloads, available through AWS.

Trainium2 is coming to Amazon EC2 Trn2 instances, and each instance will be able to scale up to 100,000 Trainium2 chips. That provides the ability to train a 300-billion parameter large language model in weeks, AWS stated in a press release.

Figure A

Graviton4 chip. Image: AWS

Anthropic will use Trainium and Amazon’s high-performance machine learning chip Inferentia for its AI models, Selipsky and Dario Amodei, chief executive officer and co-founder of Anthropic, announced. These chips may help Amazon muscle into Microsoft’s space in the AI chip market.

Amazon Bedrock: Content guardrails and other features added

Selipsky made several announcements about Amazon Bedrock, the foundation model building service, during re:Invent:

- Agents for Amazon Bedrock are generally available in preview today.

- Custom models built with bespoke fine-tuning and ongoing pretraining are open in preview for customers in the U.S. today.

- Guardrails for Amazon Bedrock are coming soon; Guardrails lets organizations conform Bedrock to their own AI content limitations using a natural language wizard.

- Knowledge Bases for Amazon Bedrock, which bridge foundation models in Amazon Bedrock to internal company data for retrieval-augmented generation, are now generally available in the U.S.

Amazon Q: Amazon enters the chatbot race

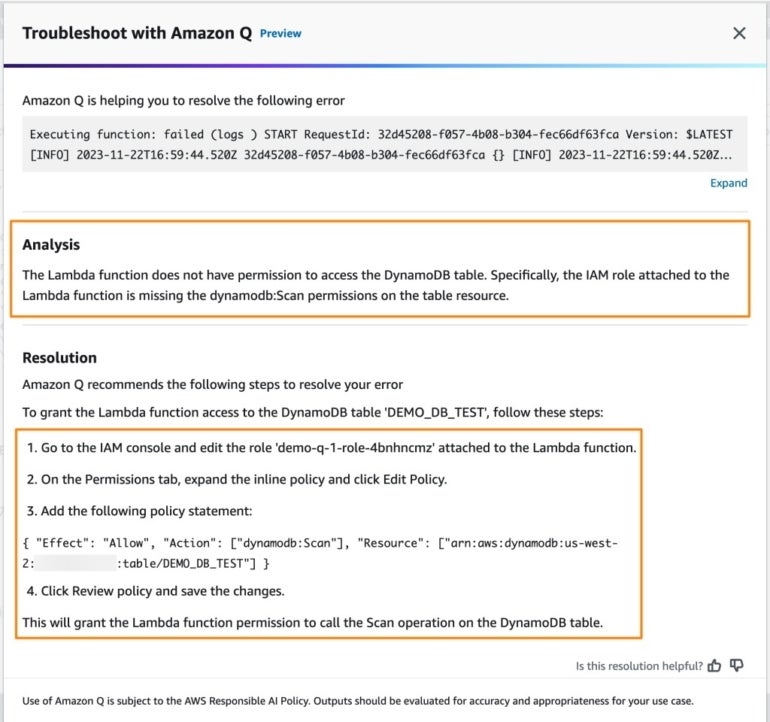

Amazon launched its own generative AI assistant, Amazon Q, designed for natural language interactions and content generation for work. It can fit into existing identities, roles and permissions in enterprise security permissions.

Amazon Q can be used throughout an organization and can access a wide range of other business software. Amazon is pitching Amazon Q as business-focused and specialized for individual employees who may ask specific questions about their sales or tasks.

Amazon Q is particularly suited for developers and IT pros working within AWS CodeCatalyst because it can help troubleshoot errors or network connections. Amazon Q will be in the AWS management console and documentation within CodeWhisperer, in the serverless computing platform AWS Lambda, or in workplace communication apps like Slack (Figure B).

Figure B

Amazon Q can help troubleshoot errors in AWS Lambda. Image: AWS

Amazon Q has a feature that allows application developers to update their applications using natural language instructions. This feature of Amazon Q is available in preview in AWS CodeCatalyst today and will soon be coming to supported integrated development environments.

SEE: Data governance is one of the many factors that needs to be considered during generative AI deployment. (TechRepublic)

Many Amazon Q features within other Amazon services and products are available in preview today. For example, contact center administrators can access Amazon Q in Amazon Connect now.

Amazon S3 Express One Zone opens its doors

The Amazon S3 Express One Zone, now in general availability, is a new S3 storage class purpose-built for high-performance and low-latency cloud object storage for frequently accessed data, Selipsky said. It’s designed for workloads that require single-digit millisecond latency, such as finance or machine learning. Today, customers move data from S3 to custom caching solutions; with the Amazon S3 Express One Zone, they can choose their own geographical availability zone and bring their frequently accessed data next to their high-performance computing. Selipsky said Amazon S3 Express One Zone can be run with 50% lower access costs than the standard Amazon S3.

Salesforce now available on AWS Marketplace

On Nov. 27, AWS announced Salesforce’s partnership with Amazon will expand to certain Salesforce CRM products accessed on AWS Marketplace. Specifically, Salesforce’s Data Cloud, Service Cloud, Sales Cloud, Industry Clouds, Tableau, MuleSoft, Platform and Heroku will be available for joint customers of Salesforce and AWS in the U.S. More products are expected to be available, and the geographical availability is expected to be expanded next year.

New options include:

- The Amazon Bedrock AI service will be available within Salesforce’s Einstein Trust Layer.

- Salesforce Data Cloud will support data sharing across AWS technologies including Amazon Simple Storage Service.

“Salesforce and AWS make it easy for developers to securely access and leverage data and generative AI technologies to drive rapid transformation for their organizations and industries,” Selipsky said in a press release.

Conversely, AWS will be using Salesforce products such as Salesforce Data Cloud more often internally.

Amazon removes ETL from more Amazon Redshift integrations

ETL can be a cumbersome part of coding with transactional data. Last year, Amazon announced a zero-ETL integration between Amazon Aurora MySQL and Amazon Redshift.

Today AWS introduced more zero-ETL integrations with Amazon Redshift:

- Aurora PostgreSQL

- Amazon RDS for MySQL

- Amazon DynamoDB

All three are available globally in preview now.

The next thing Amazon wanted to do is make search in transactional data smoother; many people use Amazon OpenSearch Service for this. In response, Amazon announced DynamoDB zero-ETL with OpenSearch Service is available today.

Plus, in an effort to make data more discoverable in Amazon DataZone, Amazon added a new capability to add business descriptions to data sets using generative AI.

Introducing Amazon One Enterprise authentication scanner

Amazon One Enterprise enables security management for access to physical locations in industries such as hospitality, education or technologies. It’s a fully managed online service paired with the Amazon One palm scanner for biometric authentication, administered through the AWS Management Console. Amazon One Enterprise is currently available in preview in the U.S.

NVIDIA and AWS make cloud pact

NVIDIA announced a new set of GPUs available through AWS: the NVIDIA L4 GPUs, NVIDIA L40S GPUs and NVIDIA H200 GPUs. AWS will be the first cloud provider to bring the H200 chips with NVLink to the cloud. Through this link, the GPU and CPU can share memory to speed up processing, NVIDIA CEO Jensen Huang explained during Selipsky’s keynote. Amazon EC2 G6e instances featuring NVIDIA L40S GPUs and Amazon G6 instances powered by L4 GPUs will start to roll out in 2024.

In addition, the NVIDIA DGX Cloud, NVIDIA’s AI building platform, is coming to AWS. An exact date for its availability hasn’t yet been announced.

NVIDIA brought on AWS as a primary partner in Project Ceiba, NVIDIA’s 65 exaflop supercomputer including 16,384 NVIDIA GH200 Superchips.

NVIDIA NeMo Retriever

Another announcement made during re:Invent is the NVIDIA NeMo Retriever, which allows enterprise customers to provide more accurate responses from their multimodal generative AI applications using retrieval-augmented generation.

Specifically, NVIDIA NeMo Retriever is a semantic-retrieval microservice that connects custom LLMs to applications. NVIDIA NeMo Retriever’s embedding models determine the semantic relationships between words. Then, that data is fed into an LLM, which processes and analyzes the textual data. Business customers can connect that LLM to their own data sources and knowledge bases.
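For readers unfamiliar with the pattern, the retrieval step described above can be sketched in a few lines of Python. This is a toy illustration only, not NVIDIA NeMo Retriever's API: the `embed` function is a hypothetical stand-in that counts words rather than using a learned embedding model, and `KNOWLEDGE_BASE`, `retrieve` and `build_prompt` are names invented for this example.

```python
import math
from collections import Counter

def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, documents, k=1):
    """Prepend the retrieved passages to the question before it goes to an LLM."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}"

# Hypothetical internal knowledge base (assumption, for illustration only).
KNOWLEDGE_BASE = [
    "Refunds are processed within five business days.",
    "The office is closed on public holidays.",
    "Support tickets are answered within one business day.",
]

if __name__ == "__main__":
    print(build_prompt("how long do refunds take", KNOWLEDGE_BASE))
```

In a production system the word-count vectors would be replaced by dense embeddings from a trained model, and the resulting prompt would be sent to an LLM; the sketch stops at prompt construction.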

NVIDIA NeMo Retriever is available in early access now through the NVIDIA AI Enterprise Software platform, where it can be accessed through the AWS Marketplace.

Early partners working with NVIDIA on retrieval-augmented generation services include Cadence, Dropbox, SAP and ServiceNow.

Note: TechRepublic is covering AWS re:Invent virtually.
